How Ouroboros Leios will change the Cardano Consensus

Cardano uses PoS consensus which, at the time of writing, can fit about 300 simple transactions into a block produced on average every 20 seconds, or roughly 15 simple transactions per second. That is still more than the PoW consensus that Bitcoin uses can process. However, for the global financial system, this is insufficient. The IOG team is working on a new version of its PoS protocol, named Ouroboros Leios.

The Blockchain Trilemma

The developer team of any blockchain network must balance three key characteristics: security, decentralization, and scalability. The blockchain trilemma refers to a widely held belief that a decentralized network can provide only two of these three properties at any given time. Security is an unconditional requirement that cannot be compromised, so teams mainly balance decentralization against scalability. However, preferring scalability over decentralization is not a good solution, as decentralization is a key feature of the blockchain industry. Moreover, as decentralization decreases, the Nakamoto coefficient decreases, and so does security.

Creating a blockchain network that achieves high scalability while not sacrificing decentralization and security is still a technological challenge for the blockchain industry. Cardano is currently one of the most decentralized networks, with over a thousand pool operators producing blocks. It is imperative that Cardano maintains its current decentralization and does not close the door to further growth. However, increasing scalability is also essential to the mission Cardano is trying to fulfill.

Ouroboros Leios is a major extension to the current PoS consensus that will significantly increase scalability and maintain the current level of decentralization. Let’s take a look at the details known so far.

Throughput

Blockchain networks group transactions and scripts into blocks, and network consensus is reached over each block. From a network perspective, it is not efficient to make a global decision for each individual transaction. It is more efficient to take multiple transactions at once and make a single decision (consensus) over the entire collection.

A block is a basic unit used to transition to a new state at the ledger level. If the majority of the network considers the proposed block valid, the new block will be stored in the blockchain forever and will become the starting point for the next state transition.

Two parameters determine the throughput of a blockchain network: block size and block time.

Block size defines the maximum size of a block, which limits the number of transactions and scripts that fit into it. If the block size is 88 KiB (90,112 B) and a simple transaction is, say, 300 B, about 300 transactions fit in the block.

The block time defines the interval between new blocks. On Cardano, a new block is produced on average every 20 seconds. This means that Cardano can process 900 simple transactions per minute, 9,000 in 10 minutes, and so on.
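The arithmetic above can be checked with a few lines of Python. This is a back-of-the-envelope sketch that uses only the example figures from the text (an 88 KiB block, 300-byte simple transactions, one block every 20 seconds); the constant and function names are illustrative, and real transactions vary in size.

```python
# Back-of-the-envelope throughput using the example figures from the text.
BLOCK_SIZE_BYTES = 88 * 1024   # 90,112 bytes
TX_SIZE_BYTES = 300            # assumed size of a "simple" transaction
BLOCK_TIME_S = 20              # average interval between blocks


def txs_per_block(block_size: int, tx_size: int) -> int:
    """Number of whole transactions that fit into one block."""
    return block_size // tx_size


def txs_per_interval(seconds: int, block_size: int = BLOCK_SIZE_BYTES,
                     tx_size: int = TX_SIZE_BYTES, block_time: int = BLOCK_TIME_S) -> int:
    """Transactions processed in `seconds`, counting only complete block intervals."""
    blocks = seconds // block_time
    return blocks * txs_per_block(block_size, tx_size)


per_block = txs_per_block(BLOCK_SIZE_BYTES, TX_SIZE_BYTES)
print(per_block)                  # 300
print(txs_per_interval(60))       # 900
print(txs_per_interval(600))      # 9000
print(per_block / BLOCK_TIME_S)   # 15.0 transactions per second
```

Dividing the per-block capacity by the block time gives roughly 15 simple transactions per second under these assumptions.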

If the number of users who want to use the network grows, the network may not be able to fit all new transactions into the next block. Some transactions must then wait in the mem-pool for a later block.

For example, this would happen if 400 users wanted to send a simple transaction every 20 seconds, and this continued for an hour. 400 transactions are more than the 300 that fit into a Cardano block. Every 20 seconds, 100 transactions are left out of the new block and must remain in the mem-pool.
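A short simulation makes the growth of the backlog in this scenario concrete. It is a simplified sketch that assumes perfectly regular 20-second blocks, exactly 400 submissions per interval, and a mem-pool that never drops anything; the function name is hypothetical.

```python
def backlog_after(seconds: int, arrivals_per_block: int = 400,
                  capacity_per_block: int = 300, block_time: int = 20) -> int:
    """Transactions still waiting in the mem-pool after `seconds` of sustained load.

    Each block interval adds `arrivals_per_block` transactions, and the next block
    removes at most `capacity_per_block` of them (unbounded mem-pool assumed).
    """
    backlog = 0
    for _ in range(seconds // block_time):
        backlog += arrivals_per_block                   # new submissions this interval
        backlog -= min(backlog, capacity_per_block)     # the block takes what fits
    return backlog


print(backlog_after(20))     # 100
print(backlog_after(3600))   # 18000
```

Under these assumptions the mem-pool grows by 100 transactions per block, i.e. 18,000 waiting transactions after one hour.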

You can think of a mem-pool as a temporary repository of transactions on network nodes. When a block producer becomes a slot leader, i.e. gains the right to create a new block in a given slot, it takes pre-validated transactions from the mem-pool and puts them into the block. It then propagates the block to the network. With each newly added block, space in the mem-pool is freed, as transactions that are already in the blockchain can be removed. However, new transactions keep arriving.

If the number of incoming transactions is consistently higher than the number the network can handle, the mem-pool must be kept from growing beyond its size limit, so the network is forced to reject new transactions. Users must wait longer for the settlement of transactions that are already in the mem-pool, and users whose transactions were rejected must try again. Both are user-unfriendly: users expect short settlement times and a network without throughput problems.
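The admission behaviour described above can be sketched as a toy FIFO mem-pool with a hard capacity. The class and its methods are hypothetical and ignore how a real Cardano node orders, revalidates, or evicts transactions; the point is only that a full pool must refuse new submissions until a block frees space.

```python
from collections import deque


class BoundedMempool:
    """Toy FIFO mem-pool with a hard capacity (hypothetical; real nodes apply
    their own admission, ordering, and revalidation rules)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.queue: deque[str] = deque()

    def submit(self, tx_id: str) -> bool:
        """Accept a pre-validated transaction, or reject it if the pool is full."""
        if len(self.queue) >= self.capacity:
            return False              # the user has to try again later
        self.queue.append(tx_id)
        return True

    def take_for_block(self, max_txs: int) -> list[str]:
        """Remove up to `max_txs` of the oldest transactions for the next block."""
        count = min(max_txs, len(self.queue))
        return [self.queue.popleft() for _ in range(count)]


pool = BoundedMempool(capacity=500)
accepted = [pool.submit(f"tx{i}") for i in range(600)]
print(accepted.count(False))          # 100
block = pool.take_for_block(300)
print(len(block), len(pool.queue))    # 300 200
```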

The mem-pool can absorb short-term peaks, but it does not make sense for it to be many times larger than a block. If the mem-pool held enough transactions for, say, 100 blocks ahead, a newly submitted transaction would not get into a block for a relatively long time (over half an hour at one block every 20 seconds). Ideally, the mem-pool should stay as small as possible, and the network should scale sufficiently so that transactions don’t stay in the mem-pool just because they don’t fit in a block.
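The waiting-time argument reduces to a small formula: a transaction that arrives behind n queued transactions must wait for floor(n / capacity) + 1 blocks. The sketch below assumes strict FIFO ordering, full blocks of 300 transactions, and a perfectly regular 20-second block interval; none of these hold exactly on a live network, and the function name is hypothetical.

```python
def expected_wait_seconds(txs_ahead: int, txs_per_block: int = 300,
                          block_time_s: float = 20.0) -> float:
    """Time until a new transaction is included, given `txs_ahead` queued before it."""
    # Every full block's worth of queued transactions is cleared first,
    # then one more block carries the new transaction.
    blocks_needed = txs_ahead // txs_per_block + 1
    return blocks_needed * block_time_s


print(expected_wait_seconds(0))                          # 20.0
print(round(expected_wait_seconds(100 * 300) / 60, 1))   # 33.7 (minutes)
```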

A decentralized network can propagate transactions relatively quickly; in theory it is limited only by Internet bandwidth. The Internet has a higher throughput than blockchain networks, because blockchains are limited by block size and block time. In other words, when it comes to block production, blockchain networks are currently unable to use the throughput the Internet offers. What holds them back is slow consensus, which is further limited by how much data can be included in a given interval. Even if transactions flow through the network quickly, the only result is a fuller mem-pool.

There are seemingly simple solutions, such as increasing the block size or decreasing the block time. Both lead to higher throughput, as more transactions would fit in a block and new blocks would be produced more frequently. Unfortunately, changing these parameters might have a negative impact on security and decentralization. For example, the larger the block, the longer it takes to propagate in the Peer-to-Peer network (increasing delay). The shorter the block time, the greater the risk of forks, as a block producer may not receive the latest block in time (and will build on the previous one instead).

As the number of nodes in the network grows, the number of hops that data must pass through can grow as well. Because each node validates the data before relaying it (to avoid spreading invalid data over the network), every additional hop slows distribution down.
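The combined effect of block size, hop count, and per-hop validation can be approximated with a simple store-and-forward model, in which every node downloads the whole block, validates it, and only then relays it. The parameter values in the example (bandwidth, latency, validation time, hop count) are invented for illustration and are not measurements of the Cardano network.

```python
def propagation_delay_s(block_bytes: int, hops: int, bandwidth_mbps: float,
                        latency_s: float, validation_s: float) -> float:
    """Store-and-forward estimate of how long a block needs to cross `hops` links.

    At every hop the block is fully transferred (size / bandwidth), delayed by the
    link latency, and validated before it is relayed further.
    """
    transfer_s = block_bytes * 8 / (bandwidth_mbps * 1_000_000)
    return hops * (latency_s + transfer_s + validation_s)


# Hypothetical network: 5 hops, 10 Mbit/s links, 100 ms latency, 50 ms validation.
small = propagation_delay_s(90_112, hops=5, bandwidth_mbps=10, latency_s=0.1, validation_s=0.05)
large = propagation_delay_s(2 * 90_112, hops=5, bandwidth_mbps=10, latency_s=0.1, validation_s=0.05)
print(round(small, 2), round(large, 2))   # 1.11 1.47
```

In this model, both a larger block and a longer path increase the delay, which is why bigger blocks and bigger networks both raise the chance that a block producer builds on an outdated chain tip.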

How to increase throughput

As mentioned, blockchain networks currently do not use most of their communication bandwidth. Most of the time, nodes are just waiting; more precisely, they receive, prevalidate, and relay new transactions and scripts. Verifying new incoming transactions is not a computationally demanding task. Nodes only have noticeably more work when they receive a new block or are tasked with producing one.

If the block time is 20 seconds, we can say that a node does minimal work for 19 seconds and validates or creates a new block once every 20 seconds. Network bandwidth usage follows a similar pattern. Many small transactions are sent across the network all the time, but once every 20 seconds a relatively large block needs to be distributed, which takes much longer than distributing a transaction.

To increase scalability, it is necessary to make full use of the nodes' computational power. It does not make sense to use the computational power and the bandwidth of the network only when a new block is to be produced. Simply put, the longer the block time, the less the computing power and bandwidth of the network are used.

Do not confuse the PoW task, which is mainly about drawing the next block producer and protecting against history rewriting, with transaction validation. In terms of scalability, the Bitcoin network also basically sits idle for 10 minutes while miners try to solve the computationally intensive cryptographic puzzle.

So, it is necessary to use the available resources. Validated transactions and scripts should be accepted and stored in the blockchain as soon as possible. In other words, it is necessary to get the validation results into the block as soon as possible, regardless of their quantity.

How can throughput be increased while maintaining security and decentralization? There must be no fundamental change in the number of nodes in the network, and the network must remain open so that a new node can join at any time.

The solution must be independent of the block size and block time parameters. It must utilize the full throughput of the communication link in order to quickly process as many new transactions and scripts as possible, and nodes should put their computing power to work while waiting for a new block to be created. The IOG team has proposed such a solution.

It is necessary to separate transaction validation and script execution from block production. Ouroboros Leios assigns validation and block production to two groups of nodes, although any node can perform both tasks.

Input endorsers

Cardano splits time into slots, each one second long. The protocol is set up so that, approximately every 20 seconds, a randomly selected node becomes the slot leader. The slot leader gets the right to insert transactions into a new block and publish it. The size of a node's stake affects how often it becomes a slot leader. A slot leader becomes a block producer when it exercises that right and produces a new block.
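The slot lottery can be illustrated with the leader-election rule from Ouroboros Praos: a pool holding a fraction α of the stake leads a given slot with probability 1 - (1 - f)^α, where f is the active slot coefficient (0.05 on Cardano mainnet, which yields the "approximately every 20 seconds" figure). In the sketch below, a seeded pseudo-random generator stands in for the verifiable random function (VRF) that real nodes use, so it shows the statistics of the draw, not its cryptography; the function names are hypothetical.

```python
import random

ACTIVE_SLOT_COEFF = 0.05   # f on Cardano mainnet: about 1 slot in 20 has a leader


def leader_probability(relative_stake: float, f: float = ACTIVE_SLOT_COEFF) -> float:
    """Praos rule: chance that a pool with this stake share leads a given slot."""
    return 1 - (1 - f) ** relative_stake


def simulate_leaders(stakes: dict[str, float], slots: int,
                     seed: int = 1) -> dict[int, list[str]]:
    """Independent per-pool draw in every slot; a seeded PRNG stands in for the VRF."""
    rng = random.Random(seed)
    total = sum(stakes.values())
    probs = {pool: leader_probability(stake / total) for pool, stake in stakes.items()}
    leaders: dict[int, list[str]] = {}
    for slot in range(slots):
        winners = [pool for pool, p in probs.items() if rng.random() < p]
        if winners:          # most slots stay empty; rarely, several pools win
            leaders[slot] = winners
    return leaders


pools = {f"pool-{i}": 1.0 for i in range(100)}   # 100 pools with equal stake
leaders = simulate_leaders(pools, slots=20_000)
print(len(leaders) / 20_000)   # close to 0.05
```

A useful property of this rule is that splitting stake across several pools does not change the combined chance of winning a slot, so the slot-leader frequency depends only on total stake.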

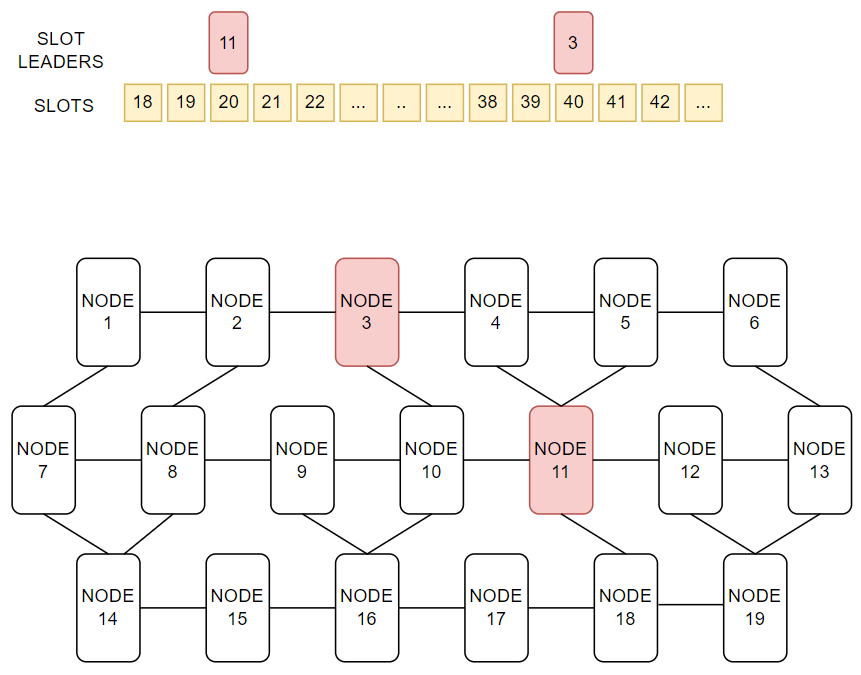

In the picture above you can see time divided into slots. In slots 20 and 40, new slot leaders are drawn: the nodes marked 11 and 3. These nodes can produce a new block and publish it.

The network already contains entities trusted by delegators (stake pool operators), and this trust can be leveraged. In addition to block producers, there will be a second group called input endorsers. Thus, within a slot, it is possible to randomly select not only a slot leader but also one input endorser (theoretically, multiple input endorsers).

The role of slot leaders is unchanged and they are tasked with producing a new block. Input endorsers are tasked with endorsing the input that should go into the block. We will talk about the content of the endorsed input later. Input endorsers can be thought of as a second layer that will preprocess transactions and scripts before they are inserted into the block by a randomly selected slot leader.

Input endorsers will be randomly selected by a mechanism similar to the one used to select slot leaders. The difference is that an input endorser can be selected in every individual slot (one input endorser per second), so several are selected before the next slot leader (approximately every 20 seconds). The slot leader will accept an input prepared by an input endorser only if it is cryptographically verified that the input endorser obtained the right to prepare it.

Between two blocks, multiple input endorsers will be randomly selected to prepare inputs. By the time a slot leader is chosen, there will be multiple endorsed inputs that can be included in the block. Instead of inserting selected transactions and scripts into the block, as it does now, the slot leader will insert references to endorsed inputs.

Blocks and endorsed inputs are distributed independently in the network. Each block can contain 0 to N endorsed inputs.
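To make "references to endorsed inputs" concrete, here is a minimal data model. It is a hypothetical sketch: the class names, the fields, and the use of a SHA-256 hash over the batch as its reference are assumptions for illustration, not the Leios wire format.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class EndorsedInput:
    """Hypothetical batch of pre-validated transactions produced by an input endorser."""
    endorser: str
    slot: int
    tx_ids: tuple[str, ...]

    @property
    def ref(self) -> str:
        """Content hash of the batch; this is what a block would store."""
        payload = f"{self.endorser}|{self.slot}|{','.join(self.tx_ids)}"
        return hashlib.sha256(payload.encode()).hexdigest()


@dataclass
class Block:
    """Hypothetical block that carries references (0..N) instead of transactions."""
    leader: str
    slot: int
    input_refs: list[str] = field(default_factory=list)


def resolve(block: Block, known_inputs: dict[str, EndorsedInput]) -> list[str]:
    """Expand a block's references into transaction IDs using locally stored inputs."""
    return [tx for ref in block.input_refs for tx in known_inputs[ref].tx_ids]


inputs = [EndorsedInput("pool-7", 3, ("tx1", "tx2")), EndorsedInput("pool-2", 9, ("tx3",))]
index = {i.ref: i for i in inputs}
block = Block(leader="pool-11", slot=20, input_refs=[i.ref for i in inputs])
empty = Block(leader="pool-3", slot=40)   # a block may reference no inputs at all
print(resolve(block, index))              # ['tx1', 'tx2', 'tx3']
```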

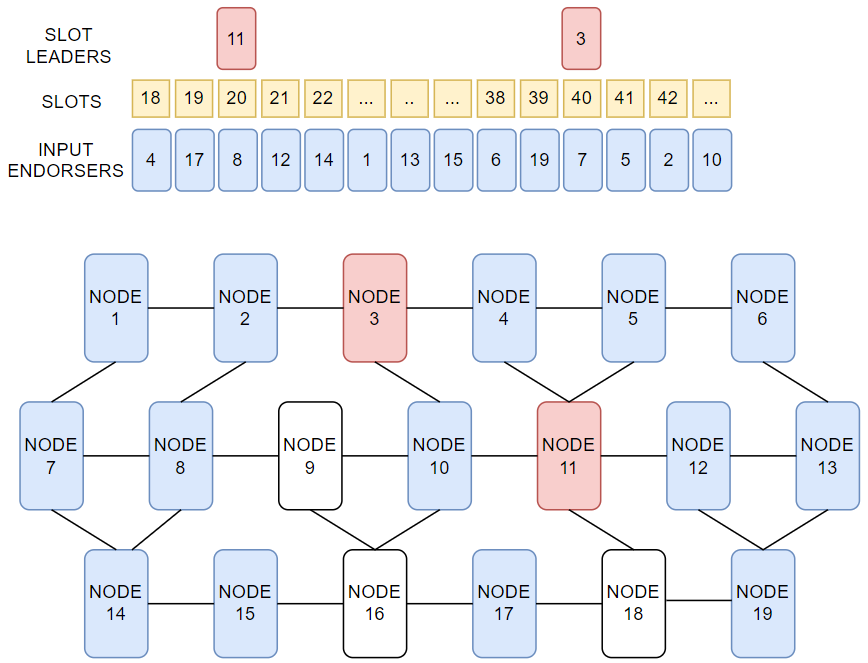

In the picture above you can see time divided into slots again. Approximately every 20 seconds, a new slot leader is drawn. In addition, an input endorser is drawn in each slot and given the right to produce a new input. Thus, two draws take place in parallel.
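The two parallel draws can be simulated on a shared slot clock. This sketch simplifies the description in two stated ways: exactly one input endorser wins every slot, and each slot independently has a leader with probability 0.05 (about one block every 20 slots). A leader references every endorsed input from earlier slots that no block has referenced yet, so two consecutive leader slots yield a block with zero references, matching the "0 to N" range mentioned earlier. The function name is hypothetical.

```python
import random


def simulate_timeline(slots: int, leader_rate: float = 0.05,
                      seed: int = 7) -> list[tuple[int, list[int]]]:
    """Run both draws on one slot clock; return (leader slot, referenced input slots)."""
    rng = random.Random(seed)
    pending: list[int] = []          # endorsed inputs not yet referenced, by origin slot
    blocks: list[tuple[int, list[int]]] = []
    for slot in range(slots):
        if rng.random() < leader_rate:
            # The leader references every input endorsed in earlier slots.
            blocks.append((slot, pending))
            pending = []
        pending.append(slot)         # this slot's input endorser publishes an input
    return blocks


blocks = simulate_timeline(2_000)
sizes = [len(refs) for _, refs in blocks]
print(len(blocks), sum(sizes) / len(blocks))   # roughly 100 blocks, roughly 20 inputs each
```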

Input endorsing is only possible because Cardano uses the Extended UTXO (EUTXO) model, in which transactions and scripts can be validated locally, independent of the surrounding environment (there is no global state). Transaction validation and script execution happen locally at the level of input endorsers, i.e. outside the main chain, which remains under the control of slot leaders. The responsibility for finding double-spend conflicts remains with the selected slot leaders. However, this becomes a lightweight process that only needs to detect and resolve conflicts in the UTXO graph.
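Because endorsers have already validated transactions and executed scripts, the slot leader's remaining check can be sketched as a single pass over the UTXO graph that looks for two transactions spending the same output. The `Tx` type, the "txid#index" output format, and the first-spender-wins policy are assumptions for this illustration; the text does not specify how Leios resolves conflicts, and this sketch does not check that spent outputs actually exist.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_id: str
    spends: frozenset[str]   # outputs consumed, written "txid#index" (hypothetical format)


def resolve_conflicts(endorsed_inputs: list[list[Tx]]) -> tuple[list[Tx], list[Tx]]:
    """Scan endorsed inputs in order and reject any transaction that spends an
    output already consumed earlier (first spender wins, an assumed policy).
    Scripts are not re-run: endorsers already validated each transaction."""
    spent: set[str] = set()
    seen: set[str] = set()
    accepted: list[Tx] = []
    rejected: list[Tx] = []
    for batch in endorsed_inputs:
        for tx in batch:
            if tx.tx_id in seen:
                continue                  # same transaction again: a duplicate, not a conflict
            seen.add(tx.tx_id)
            if tx.spends & spent:
                rejected.append(tx)       # double-spend of an already consumed output
            else:
                spent |= tx.spends
                accepted.append(tx)
    return accepted, rejected


a = Tx("a", frozenset({"g#0"}))
b = Tx("b", frozenset({"g#0"}))   # spends the same output as "a"
c = Tx("c", frozenset({"a#0"}))   # spends an output created by "a"
ok, bad = resolve_conflicts([[a], [b, c], [a]])
print([t.tx_id for t in ok], [t.tx_id for t in bad])   # ['a', 'c'] ['b']
```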

The same transaction can be contained in multiple endorsed inputs. This can happen if an input endorser does not know the contents of the previous inputs. If a transaction appears in the blockchain more than once, only its first occurrence is its canonical position in the ledger.
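Choosing the canonical position is then a first-occurrence scan over the endorsed inputs in ledger order. The function below is a hypothetical sketch that treats each endorsed input as a list of transaction IDs and assumes the inputs are already ordered as the chain references them.

```python
def canonical_positions(endorsed_inputs: list[list[str]]) -> dict[str, tuple[int, int]]:
    """Map each transaction ID to (input index, position within input) of its
    first appearance; later copies are ignored as duplicates."""
    positions: dict[str, tuple[int, int]] = {}
    for i, tx_ids in enumerate(endorsed_inputs):
        for j, tx_id in enumerate(tx_ids):
            positions.setdefault(tx_id, (i, j))   # keeps only the first occurrence
    return positions


ledger_order = [["t1", "t2"], ["t2", "t3"], ["t1"]]
pos = canonical_positions(ledger_order)
print(pos)   # {'t1': (0, 0), 't2': (0, 1), 't3': (1, 1)}
```

Iterating over the returned dictionary also yields the de-duplicated ledger order, because Python dictionaries preserve insertion order.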

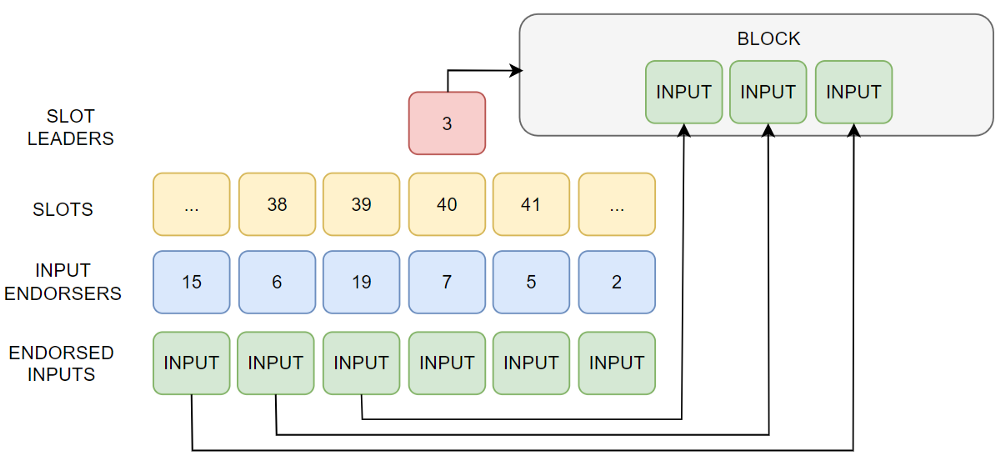

In the picture above you can see how a new endorsed input is created every second. By the time a new slot leader is drawn, several endorsed inputs are ready to be used. The slot leader performs its validation process and can insert all the selected inputs into the new block.

Let’s see what happens from submitting the transaction to inserting it into the block.

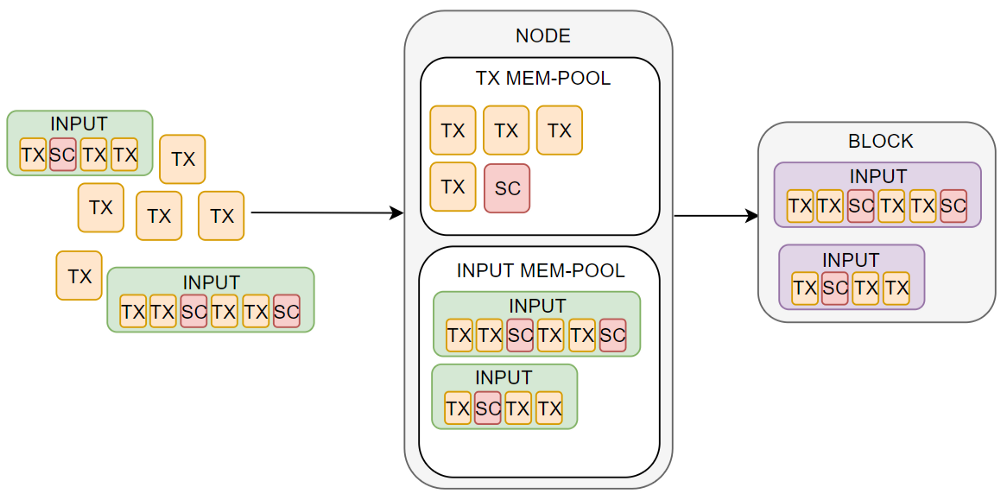

When a node receives a new transaction, it validates it. All valid transactions will be inserted into the mem-pool. If a node gets the right to create an endorsed input in a given slot, it will include the pre-validated transactions in the new input, creating a batch of transactions. You can think of this as a new kind of block. Endorsed inputs (blocks) are distributed in the Peer-to-Peer network. In addition to the mem-pool for transactions, there will be a mem-pool for endorsed inputs. Nodes will keep multiple endorsed inputs in this dedicated mem-pool in order to be ready to produce a new block in case they become a slot leader.
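The flow described above can be sketched as a node that keeps the two mem-pools side by side. Everything here is a hypothetical simplification: validation is reduced to a boolean flag, endorsed-input IDs are plain strings instead of content hashes, and the endorser lottery is assumed to happen elsewhere. The `receive_block` method also covers the clean-up step in which nodes drop endorsed inputs once a new block references them.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical node keeping a transaction mem-pool and an endorsed-input mem-pool."""
    name: str
    tx_pool: list[str] = field(default_factory=list)
    input_pool: dict[str, list[str]] = field(default_factory=dict)  # input ID -> tx IDs

    def receive_tx(self, tx_id: str, is_valid: bool) -> None:
        # Real validation is abstracted into `is_valid`; invalid or duplicate txs are dropped.
        if is_valid and tx_id not in self.tx_pool:
            self.tx_pool.append(tx_id)

    def endorse(self, slot: int, max_txs: int) -> tuple[str, list[str]]:
        """Build an endorsed input; call only after winning the endorser draw for `slot`."""
        batch, self.tx_pool = self.tx_pool[:max_txs], self.tx_pool[max_txs:]
        input_id = f"{self.name}@{slot}"      # stand-in for a content hash
        self.input_pool[input_id] = batch     # kept locally for a possible future block
        return input_id, batch                # the caller would gossip this to peers

    def receive_input(self, input_id: str, tx_ids: list[str]) -> None:
        """Store an endorsed input gossiped by a peer."""
        self.input_pool.setdefault(input_id, tx_ids)
        covered = set(tx_ids)
        # Assumption: transactions already carried by an endorsed input stop waiting.
        self.tx_pool = [t for t in self.tx_pool if t not in covered]

    def receive_block(self, input_refs: list[str]) -> None:
        """Drop endorsed inputs that a newly received block has referenced."""
        for ref in input_refs:
            self.input_pool.pop(ref, None)


node = Node("pool-5")
for tx, ok in [("t1", True), ("t2", False), ("t3", True), ("t4", True)]:
    node.receive_tx(tx, ok)
input_id, batch = node.endorse(slot=12, max_txs=2)
print(input_id, batch, node.tx_pool)      # pool-5@12 ['t1', 't3'] ['t4']
node.receive_input("pool-9@13", ["t4", "t9"])
node.receive_block(["pool-5@12"])
print(node.tx_pool, list(node.input_pool))   # [] ['pool-9@13']
```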

Nodes not only store endorsed inputs but also verify their validity locally via Mithril certificates, which are cryptographic proofs of validity for groups of transactions.

Once a new slot leader is selected, it selects endorsed inputs from the mem-pool and verifies that no double-spend has occurred. It then inserts references to all selected endorsed inputs into the new block. The new block is distributed to the network, and other nodes validate it in much the same way as they do now. As the new block propagates, nodes can remove the referenced endorsed inputs from their mem-pools.

In the figure above you can see how a node receives new transactions as well as new endorsed inputs from the Peer-to-Peer network. Both are stored in dedicated mem-pools. The node got lucky and was elected slot leader, so it produced a new block.

Note that this concept delegates transaction validation and script execution to all nodes and does not burden a node with this work at the moment it is elected slot leader. It puts the computational power of nodes to use during the “idle” time when all nodes wait for a new slot leader.

Transactions and scripts are processed by the network essentially all the time, so creating a new block is a relatively quick process. Because references to endorsed inputs are used, the block size can remain the same as it is today, even though the volume of data processed can be many times larger. For example, each endorsed input could contain, say, 1000 transactions. Thus, distribution of the block will not be slowed down by its size. At the same time, nodes have access to all the data referenced in the new block.
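The size argument can be quantified with the text's own example of 1000 transactions per endorsed input. The 32-byte reference size (a 256-bit hash) and the count of 20 inputs per block (one per second between blocks) are assumptions made for this back-of-the-envelope sketch.

```python
REF_BYTES = 32          # assumed size of one reference (a 256-bit hash)
TX_BYTES = 300          # simple transaction, as in the earlier example
TXS_PER_INPUT = 1000    # the text's illustrative figure


def block_vs_referenced(n_inputs: int) -> tuple[int, int]:
    """Bytes of references stored in the block vs. bytes of transactions they point to."""
    return n_inputs * REF_BYTES, n_inputs * TXS_PER_INPUT * TX_BYTES


refs, data = block_vs_referenced(20)   # about 20 one-second inputs between blocks
print(refs, data)                      # 640 6000000
```

Under these assumptions the references occupy well under 1 KB, while the transactions they point to total about 6 MB, so the block itself stays far below today's size limit.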

This concept may resemble Layer 2 solutions such as ZK Rollups and Optimistic Rollups, or other solutions such as the Lightning Network. However, the advantage is that there is no second network with its own infrastructure. Everything remains under the control of the current stake pool operators within the Cardano network. Users will not notice the change except for better performance. There is no need for bridges or different addresses, so the user experience will not be negatively affected.

Conclusion

At the time of writing, fans are eagerly awaiting more details from the IOG team. Probably the biggest question for us is where and how transactions and scripts will be stored, as blocks will only reference the data. Because Cardano will scale much better, it will also generate more data that needs to be stored somewhere. Ideally, this would be solved through sharding, so that nodes don’t have to store all the data, but only part of it. Let’s see how the IOG team solves this.

It is premature to claim that the blockchain trilemma is a solved problem. However, Ouroboros Leios will be a significant step forward, as network throughput will no longer be limited by the block size and block time parameters. It is not yet known how far Cardano will scale; however, it is more than likely that throughput will increase by at least an order of magnitude. It has often been said that a blockchain is a slow database. Ouroboros Leios invalidates this argument and may rewrite the history of network consensus.