Blockchain scalability and Cardano’s approach to it

Public blockchains struggle with adoption. Higher adoption depends critically on the ability of the protocol to scale. Let’s explain exactly what scalability is and look at the differences between PoS and PoW.

How to define scalability

The standard Wiki definition might sound like this:

Scalability is the property of any system to handle a growing amount of work by adding more resources to the system.

Scalability is the ability to handle multiple requests as the user base grows. User growth often reaches the point where resources need to be increased to maintain the quality of service at the expected level. Increasing scalability often entails extra costs.

Few services or protocols are built from the outset for mass adoption. The success of the service is difficult to predict in advance. Therefore, the number of users is only estimated in advance and the service starts. As the service becomes more popular, users may see a slowdown in response, for example. This is because the number of users is growing and existing hardware is overloaded and does not manage to handle new user requests.

Let’s try one analogy from the real world. Imagine a clerk at the post office. The clerk can handle 10 customers per hour. With eight-hour working time, it is 80 customers a day. If 50 people a day come to the post office, the clerk will be fine with the job. Once 100 people come a day, the clerk will hardly stop. However, as soon as 200 people a day visit the post office, the clerk cannot handle the onslaught of work. Customers will not be satisfied and the clerk will probably get sick.

You can think of a clerk as a network capacity that can handle a certain number of users per day. It is a post-office capacity in the real world. Think of customers as transactions. Thus, network performance can be measured in terms of the number of transactions handled over a period of time, such as transactions per second (TPS). In our analogy, this would be the number of customers per day (CPD).

In the traditional world of centralized network services (client-server), hardware can scale vertically and horizontally. Vertical scaling means adding more computing power and memory to the system. Horizontal scaling involves adding new nodes to the system that will do the same job. You can scale vertically and horizontally simultaneously.

In our analogy, we can help the clerk by buying a faster computer, using a faster database, or replacing a traditional typewriter with a computer. That would be vertical scaling. Or we can scale horizontally, which would involve employing another clerk. In the case of vertical scaling, one clerk would be able to handle 20 more customers per day and would reach maybe 100 satisfied customers per day. In the case of horizontal scaling, two clerks could handle 160 customers per day, three clerks 240 customers, and so on.
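The clerk arithmetic can be written down as a small sketch. The function name and the figure of 12.5 customers per hour (the rate a single, better-equipped clerk would need to reach 100 customers per day) are assumptions made for illustration.

```python
def daily_capacity(clerks: int, customers_per_hour: float, hours_per_day: float = 8) -> float:
    """Customers per day (CPD) that the post office can serve."""
    return clerks * customers_per_hour * hours_per_day


# Baseline from the analogy: one clerk, 10 customers per hour, 8 hours.
baseline = daily_capacity(clerks=1, customers_per_hour=10)

# Vertical scaling: the same clerk with better tools (assumed 12.5 per hour).
vertical = daily_capacity(clerks=1, customers_per_hour=12.5)

# Horizontal scaling: more clerks doing the same job at the original rate.
horizontal_two = daily_capacity(clerks=2, customers_per_hour=10)
horizontal_three = daily_capacity(clerks=3, customers_per_hour=10)

print(baseline, vertical, horizontal_two, horizontal_three)
```

Vertical scaling raises the rate of one worker, while horizontal scaling multiplies the number of workers.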

Blockchain scaling issues

Blockchain is built on a distributed network that requires a majority consensus to change data. This greatly complicates scalability, as the decision must ideally be made in several places within a short period of time.

Let’s go back to our analogy. Imagine that all clerks have to meet at the end of the day to mutually validate the work they have done for customers. Everyone confirms or rejects each document. In the case of 2 clerks, each clerk would have to inspect and approve 80 documents from her colleague in addition to her own work. Imagine that one mistake in the documents would result in the rejection of the whole day’s work (analogous to a block of transactions). Now imagine that these clerks do not sit in one office, but have to send documents to each other and then send a reply. Work would be very slow and adding a new clerk would only increase complexity.

Adding a new node to the network or increasing its performance may not affect the network throughput at all. Performance may even deteriorate as a consensus between multiple entities will have to be made, which is always more challenging. Nodes that participate in consensus are distributed globally and need data to make a decision. It takes time to distribute data across the globe. The protocol must be designed to minimize communication requirements.

In consensus-based distributed networks, network capacity and data transfer speed are a big limitation. Realistically, only a few MB can be distributed across the globe in tens of seconds. At the same time, the larger the block of data, the slower it propagates, and the overall network speed in a given design may be limited by the slowest node (the farthest one or the one with a poor internet connection). If the network is to be fast (to have fast transaction and block finality), it is necessary to try to reduce block time.

Suppose a regular transaction has 250 bytes. Thus, a 1 MB block can hold about 4,000 transactions. If the block time is 10 minutes and we convert it into transactions per second, we get about 6.67 TPS. If the block time is 20 seconds, we get 200 TPS at the same block size.
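The throughput figures above follow from a simple division, sketched here. The function and constant names are illustrative, and 1 MB is taken as 1,000,000 bytes.

```python
def transactions_per_second(block_size_bytes: int, tx_size_bytes: int, block_time_seconds: float) -> float:
    """Upper bound on throughput when every block is completely full."""
    txs_per_block = block_size_bytes // tx_size_bytes
    return txs_per_block / block_time_seconds


BLOCK_SIZE = 1_000_000  # 1 MB, assumed to mean 10**6 bytes
TX_SIZE = 250           # bytes per regular transaction

tps_10_min = transactions_per_second(BLOCK_SIZE, TX_SIZE, 10 * 60)
tps_20_sec = transactions_per_second(BLOCK_SIZE, TX_SIZE, 20)

print(round(tps_10_min, 2))  # 6.67
print(tps_20_sec)            # 200.0
```

The bound assumes full blocks and ignores block headers, so real throughput would be somewhat lower.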

Block time has a direct effect on the required data storage capacity. The lower the block time, the faster the storage fills up. If the 1 MB blocks were always full, at a block time of 10 minutes the blockchain would grow by about 144 MB per day. For a 20 second block time, this would be 4,320 MB per day. Obviously, not everyone will be able to store such large and rapidly increasing amounts of data. It is, therefore, necessary to take action to reduce the high demands on storage. The following methods may be considered: data pruning, subscriptions, compression, partitioning, sidechains, sharding.
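The storage growth can be checked the same way. This is a minimal sketch that assumes every block is full; the names are illustrative.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def daily_growth_mb(block_size_mb: float, block_time_seconds: float) -> float:
    """Chain growth per day in MB, assuming every block is full."""
    blocks_per_day = SECONDS_PER_DAY / block_time_seconds
    return blocks_per_day * block_size_mb


growth_10_min = daily_growth_mb(1, 10 * 60)
growth_20_sec = daily_growth_mb(1, 20)

print(growth_10_min)  # 144.0
print(growth_20_sec)  # 4320.0
```

Cutting the block time from 10 minutes to 20 seconds multiplies both throughput and storage growth by the same factor of 30.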

Some consensus protocols work by voting to add a block within a closed group. One random node proposes a block, and the rest of the nodes (including the proposer) exchange their opinions about the proposed block and, based on the results, add or do not add the block to the blockchain. Complexity increases with the number of entities. It is, therefore, necessary to set an upper limit on the number of entities involved in the consensus. Another approach is the concept of one randomly chosen block proposer with the others acting as approvers. There is no complicated communication between nodes and therefore the number of nodes is not limited. There are also other approaches, and some do not even work with the concept of blocks. Blocks are very suitable for internal protocol synchronization, but they are not a condition for building a consensus-based distributed network.
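A rough way to see why voting within a group needs an upper limit is to count messages. The sketch below uses a deliberately simplified counting model (an assumption, not a description of any specific protocol): in the voting design every node sends its vote to every other node, while in the proposer design the block only has to reach each other node once.

```python
def all_to_all_vote_messages(nodes: int) -> int:
    """Every node sends its vote on the block to every other node."""
    return nodes * (nodes - 1)


def proposer_broadcast_messages(nodes: int) -> int:
    """One proposer's block has to reach each of the other nodes once."""
    return nodes - 1


for n in (10, 100, 1000):
    print(n, all_to_all_vote_messages(n), proposer_broadcast_messages(n))
```

Under these assumptions the voting design grows quadratically with the number of nodes, while the single-proposer design grows linearly.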

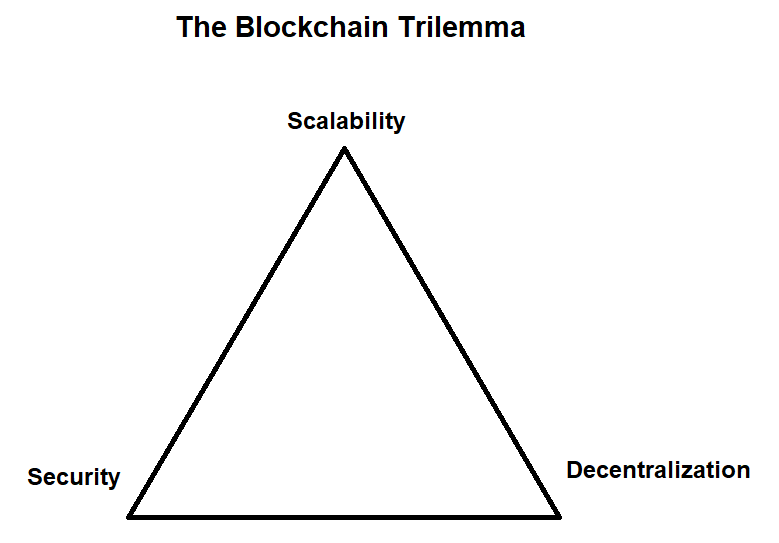

Blockchain trilemma

The famous blockchain trilemma is one of the biggest hurdles for cryptocurrencies. The trilemma is basically a triple of properties by which we can judge the quality of a distributed network. These features are the scalability discussed in this article, the degree of decentralization, and security.

In the context of distributed networks, and especially in the case of blockchain, projects often use the concept that one randomly selected entity proposes a block of transactions and all other nodes serve as a control body (as we mentioned above). The right to create a block randomly rotates between nodes or pools.

The degree of decentralization can thus be judged by the number of entities that can acquire the right to select transactions and put them in a new block. Furthermore, it is possible to judge the number of nodes that only validate these blocks and put them in their blockchain. It should be noted that the right to create a new block is much stronger than the right to validate proposed blocks, as the block proposers actively produce new blocks, while validators only monitor this activity and cannot quickly create a block in case of a problem.

To consider a protocol as a secure one, a protocol needs to be resilient in the short term and immutable in the long term. The protocol has to be able to prevent and be able to recover from short-term attacks without making changes to previous states of the distributed ledger. The ledger must stay immutable.

How to scale a project

As you can see, the project team cannot simply improve scalability; it must also remain strong in terms of decentralization and security. The team has to balance these qualities well, and this makes it very difficult to improve scalability, because the properties may be mutually limiting. The design of the protocol must not overly favor one property at the expense of the others. There must be room for future growth and improvement. This is one of the reasons why there may be more projects with different characteristics in the future. One will be extremely secure, another will scale well while still being reasonably secure. There are many problems to solve, and users will choose the best available project for each of them. It does not work the other way around: people will not use a network for a problem it is not suited to when a more suitable alternative exists.

Hydra: Cardano scalability solution

In the future, most transactions will be processed by second-layer solutions. The first layers might not be able to satisfy high transaction demand. Cardano has a second-layer solution and its name is Hydra.

Efforts to increase scalability often result in reduced decentralization. A project with low decentralization can hardly be considered secure. Decentralization is a key feature in distributed networks. The right to produce blocks should not be fixed to a low number of entities, as can be seen in DPoS projects (e.g. 21 in EOS, 27 in Tron). Or, to put it another way, this may be the case, but the question is to what extent such a system can be considered decentralized.

Some projects do not limit the number of block producers, but the network may still tend toward centralization when the delegation of power to an entity (mostly a pool) is uneven, not steered by the protocol, or not limited in any way. This can be seen in both PoW and PoS projects. If a network works with the pool concept, that is, with pools to which other consensus participants delegate power through hash-rate or coins, it is important that the project somehow copes with the growing power of pools. If this is not the case, some pools can gain a large share of power, which is unwanted from the point of view of decentralization. We can say that a pool can become saturated with power.

There is one more aspect in play. To get a high throughput, the network needs powerful hardware and it must be used wisely. If the number of nodes is fixed, as is the case with DPoS, there is pressure to make the nodes very powerful. Nodes are thus financially affordable only to a limited group of people and the network may tend toward centralization. From a decentralization point of view, it is better to scale the network in such a way that increasing the number of average nodes also increases the ability to handle more transactions. As the network becomes more used, it is natural to scale through the addition of hardware. Decentralization must not be sacrificed. A well-designed distributed network should thus be made up of a large number of independent and affordable nodes while being able to increase transaction throughput. This is a big challenge.

PoW and PoS are very similar systems in the sense that people delegate power to selected pools. Pool owners themselves have their own hash-rate or coins, so they can maintain their position through their wealth. In general, if you have power, you can easily strengthen your position. This holds for distributed networks as well, because pool operators take commissions for their service.

Adding a full node to a PoW network does not increase the ability to handle more transactions. Most full nodes only validate blocks that pools produce. However, adding a new ASIC miner makes the network more secure against hash-rate attacks. Adding a full node or an ASIC miner only increases the security of consensus. Neither decentralization nor scalability is increased.

As for the economic model, only people who purchase ASIC miners can participate in consensus and network security and are rewarded. Full node owners are not financially rewarded by the network. The motivation to get a full node is merely a desire for greater financial freedom. However, this is a good motivation and many people do it.

In PoW, most of the value of new coins goes to cover the cost of mining. Today, mining is centralized in large halls and domestic miners are rare. The disadvantage of PoW is that a pool operator is economically motivated to increase its share of power, because the greater the pool hash-rate is, the more rewards it gets. There is no upper pool size limit. Since the hash-rate can constantly grow, it is difficult to define this limit. In PoW, it is very expensive to come up with a new pool and get a 5% share of the total hash-rate.

As you probably know, PoW is very secure because producing a block requires finding a valid hash, which takes on average 10 minutes in Bitcoin. We can only consider increasing the block size, but the options here are limited by network latency and consensus fairness. Thus, scaling on the first layer is almost impossible for PoW networks. At the same time, decentralization is not too high because of the existence of large pools and the lack of a mechanism to decrease their power.

In the Cardano PoS network, the network is owned by coin holders (stakeholders). This has advantages both in terms of decentralization and in terms of scalability. The network is decentralized across all stakeholders, so the wider the distribution of coins, the more decentralized the network consensus will be. And because the consensus rewards not only pool owners but also all coin owners who delegate their coins to a selected pool, people are economically motivated to acquire and hold coins. Owners of full nodes are also rewarded by the network for their participation in the consensus if they delegate ADA coins to a pool.

There is nearly no need to pay for energy in the PoS, so if the network is useful and handles a large number of transactions, the network will easily make a living from the charges. In addition, there will also be enough funding to reward stakeholders. Holding coins can be very convenient.

To avoid the problem of creating only a few large pools, there is a concept of pool saturation. Saturation is the ability of a protocol to define the desired (not forced) number of pools and to economically motivate that target. If the desired number is set to 1000, the system will work economically best if there are 1000 pools of equal size. People can actively choose a pool according to the pool operator’s fee, its reputation, operator activity in the community, size, saturation, and other parameters.

One entity will be able to buy large amounts of coins and set up multiple pools through its own wealth (without the need to rely on delegators and their coins). This cannot be prevented and we will see it in practice. However, thanks to the saturation concept, it will be relatively easy to create a new pool. For example, only a thousandth of the number of staked coins will be enough to set up a new pool at full strength.
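The economic idea behind saturation can be illustrated with a deliberately simplified model. This is a sketch under assumptions and not the actual Cardano reward formula, which has additional terms: here a pool's share of rewards simply stops growing once its stake exceeds the total stake divided by the desired number of pools. All names are hypothetical.

```python
def saturation_point(total_staked: float, desired_pools: int) -> float:
    """Stake at which a pool is considered saturated (simplified model)."""
    return total_staked / desired_pools


def reward_share(pool_stake: float, total_staked: float, desired_pools: int) -> float:
    """Fraction of rewards a pool earns; stake above the cap earns nothing extra."""
    cap = saturation_point(total_staked, desired_pools)
    return min(pool_stake, cap) / total_staked


TOTAL = 1_000_000  # illustrative amount of staked coins
K = 1000           # desired number of pools

print(saturation_point(TOTAL, K))   # 1000.0, a thousandth of the staked coins
print(reward_share(1000, TOTAL, K))
print(reward_share(5000, TOTAL, K))
```

In this model a pool holding five times the saturation point earns the same total reward as a pool sitting exactly at it, so the reward per coin drops and delegators have a reason to move to smaller pools.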

As you will see in more detail below, in terms of scalability, the protocol is designed in the first phase in such a way that the network gives the right to produce a new block to one of the 1000 pools. The block is then only propagated to other nodes that validate it. The distribution of the block is thus also its approval across the network. There is no complicated debate between nodes over block acceptance. It’s actually very similar to the PoW system. The only difference is that the Cardano network itself decides who will be in charge of block production (determining the slot leader). This can reduce block time to seconds (20 seconds in the Shelley testnet). The saturation point setting can be changed and can easily be increased. It is possible to set it to 2000 or 5000, so it will be economically advantageous to run more pools. This can give the system the necessary computing power. Moreover, with this high number of independent entities, sharding may be involved and decentralization will not be sacrificed.

Sharding is the ability of a protocol to come to a distributed parallel consensus. Imagine, for example, that the network is divided into multiple shards (pieces) in which transactions will be approved and, finally, the network makes sure that all the shards have reached an honest consensus. Sharding can be an elegant first-layer scalability solution. Sharding will be described in our other article.

Cardano consensus

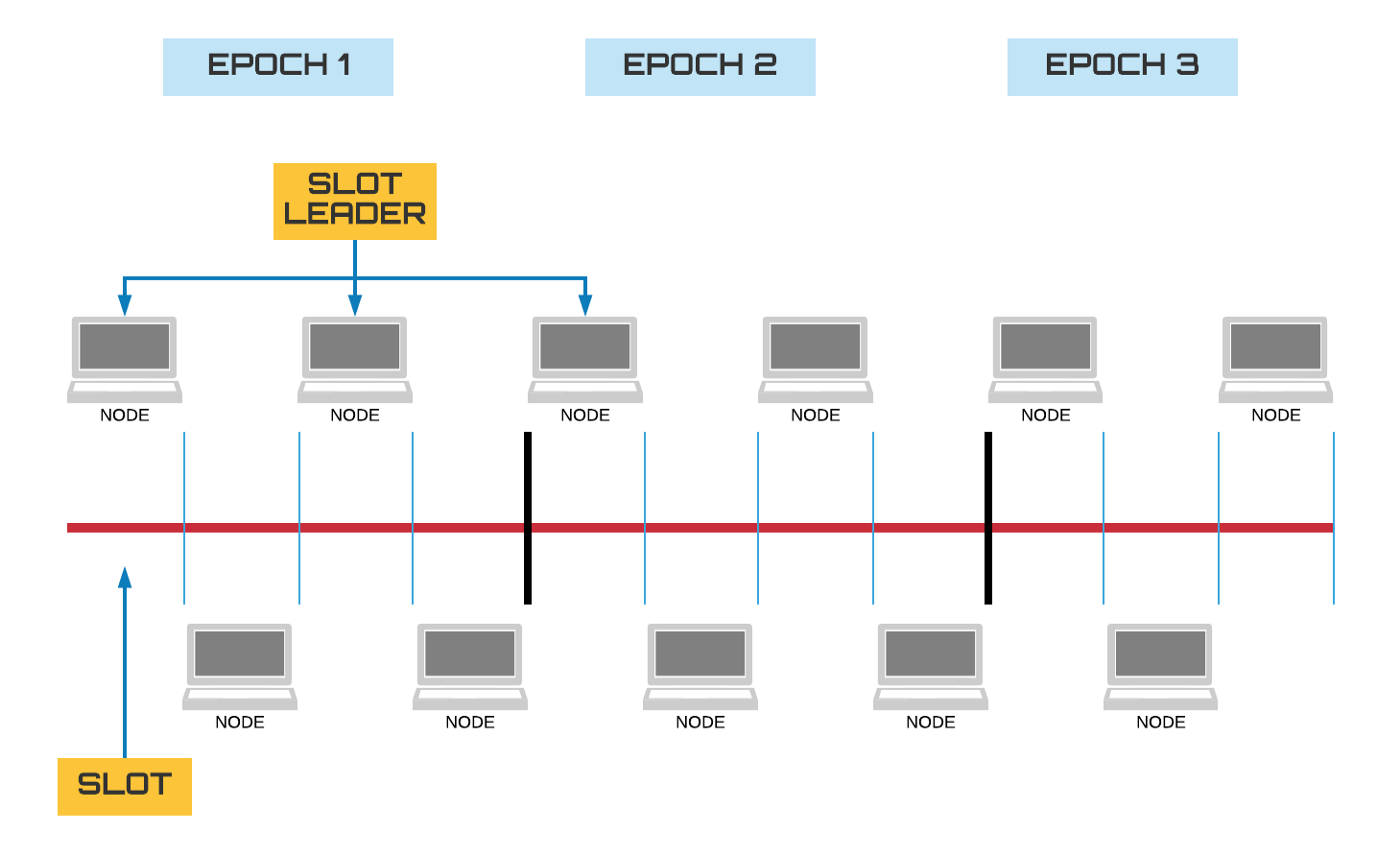

In Cardano PoS, we can observe the following consensus sequence:

- At the beginning of each epoch, the network decides who will be slot leaders. The selection is based on staked ADA coins. The network points to some Lovelace that determines a given pool (the pool for which the Lovelace is staked). The process is done in advance.

- The last block has just been added. The network shifts to another slot so a new node can produce a block.

- Nodes check whether they acquired the right to produce a block in a given slot. If so, the node gets proof of winning the right.

- The selected pool (slot leader) can produce a block and sign it in a way that the rest of the nodes are able to accept it. The selected node can fail to produce a block so the slot might stay empty. No other node is able to produce a valid block in a given slot since it is not able to sign it properly.

- If the selected node produced the block, it distributes it to other nodes. The proof of winning the right for block production is included in the block.

- Other nodes receive the block and can validate that the block was produced by the correct node and based on that can decide whether the block will be included in the blockchain.

- The process continues from the second step for the rest of the epoch.

As you can see, unlike PoW, no time is lost during the block production process in determining who will produce the block. Everything is decided ahead for the epoch, so the pool only finds out whether it was given the right to produce the block in that slot, and if so, it does so immediately. The block is then distributed, which is the phase of its acceptance by the network. Due to the short block time, it does not matter much if a node occasionally fails to use its right to produce a block.
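The idea that the network "points to some Lovelace" can be sketched as a stake-weighted lookup. This is a simplified illustration under assumptions: the real protocol relies on a verifiable random function that each pool evaluates privately, whereas the sketch derives a public, deterministic number from a hypothetical epoch nonce and slot number with SHA-256. The pool names and the function name are made up for the example.

```python
import hashlib
from bisect import bisect_right
from itertools import accumulate


def slot_leader(stakes: dict, epoch_nonce: str, slot: int) -> str:
    """Pick the pool that owns a pseudo-randomly chosen Lovelace."""
    pools = sorted(stakes)  # fixed order so every node computes the same result
    cumulative = list(accumulate(stakes[p] for p in pools))
    total = cumulative[-1]
    # Deterministic pseudo-random Lovelace index in [0, total).
    digest = hashlib.sha256(f"{epoch_nonce}:{slot}".encode()).digest()
    lovelace_index = int.from_bytes(digest, "big") % total
    # The first pool whose cumulative stake exceeds the index owns that Lovelace.
    return pools[bisect_right(cumulative, lovelace_index)]


# Hypothetical stake distribution in Lovelace.
stakes = {"pool_a": 600, "pool_b": 300, "pool_c": 100}
schedule = [slot_leader(stakes, "epoch-42-nonce", slot) for slot in range(10)]
print(schedule)
```

Because the result depends only on the stake distribution, the nonce, and the slot number, any node can recompute it and verify that a block came from the right pool; a pool with more staked coins is selected proportionally more often.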

Summary

Cardano will work on its scalability within the Ouroboros Hydra consensus. It may also be necessary to build second layers so that we can scale globally for a billion users. Even so, decentralization still needs to be looked at in the second layer. The second layers are good for frequent transactions between two users. It is impossible to build a decentralized future on a centralized infrastructure. It won’t work. Decentralization is important not only as a defense against the enemy from the outside but also against the enemy within the system. Excessive power held by an entity inside the network can mean collecting user data, raising fees, or censoring transactions. Such an entity can be a single point of failure or an attractive target for attack. The best solution to these problems is decentralization, nothing else.

Decentralization, hand in hand with scaling, can bring new people to the blockchain. User-friendliness and fast, cheap transactions are required for higher adoption. People are used to centralized systems that offer speed and ease of use. Blockchain must offer this too; otherwise, people won’t use it as an alternative to the current financial systems.